In this tutorial we learn about ways to measure performance of an Algorithm. By measuring performance of an algorithm we can determine which algorithm is better than the other one. Performance of an algorithm is usually represented by the Big O Notation. Follow along and learn more about measuring performance of an algorithm.

I am in a Big problem!

I just received a birthday gift from my elder sister and I want to send a thank you message to her. But, the thing is I am running short on time. I want to get this task done as soon as possible. I’ve listed three ways in which I can solve this problem.

1) Sending a thank you note through post/mail.

2) I could ring her phone or compose an email to convey the message.

3) Or drive to her house and thank her in person.

But, which approach do you think would be fastest?

Approach 2, right? Yes, I was thinking the same.

My end goal of sending a thank you message can be easily accomplished by few clicks or a with one phone call.(Big assumption: Gossip time on the phone not counted)

Similarly, in order to solve any problem in computer science, we write programs. Every program — though can be written in a different programming language — like the one discussed above, can be solved in many ways. In the programming world, we call these ways/solutions as Algorithms.

What is an algorithm?

Oh, yeah, big word alert: What is an algorithm? An algorithm is nothing but a bunch of steps we undertake to achieve some goal, sister getting a thank you message in our above example. Let’s take our third approach to send thank you message to big sister.

Wait, you might be wondering, does that count as an algorithm? Sure it does!

//Rough Steps to Solve our Message Problem

thankYouMessageInPerson(message){

"

1. Start the car

2. Drive to her house

3. Ring house bell

4. Give a big bear hug and thank you message

"

}

thankYouMessageInPerson(‘Awesomest sis in the world’)

Similarly, before jumping to write a program in computer science, we always write an algorithm; a bunch of steps required to accomplish the task or solve the problem.

But, you may ask: We had multiple solutions to our thank you message problem. How do we decide/analyze which algorithm is fastest and best to solve our problem?

Analysis of Algorithm? How do we analyze them?

Before I answer your previous questions, let’s try to solve an immediate issue which popped up. I will get back to how do we go about analyzing algorithm after solving this immediate issue.

Imagine this, you just joined a new development team and were given a piece of code. As you look through the code, you find two methods sort_1 and sort_2. Both these methods take same input in the form of an array of unsorted integer. And the function/method returns a sorted array i.e integers returned are in an order. Without going into code details, we can write the pseudo code as

//Both the functions take an array of unsorted integers as input

function sort_1(array unsortedArrayOfIntegers){

//Some Sorting Logic

return sortedArray;

}

function sort_2(array unsortedArrayOfIntegers){

//Different Kind Of Sorting Logic

return sortedArray;

}

Something like Input Given: An array of unsorted numbers like [8, 2, 10, 1]

Output Expected: Sorted array like [1, 2, 8, 10]

Someone from the team comes and tells you, “Ah, one of this sorting method is faster than the other. But which one I can’t remember. Can you find out for me ?”

Newbies can’t say no. So, time to put on our Testing Caps.

Something which is very clear is that we still don’t know any full proof approach which will give us an accurate result. We might have to do a repeated test to get a good average result.

Measuring performance by measuring time

Test Plan: Let’s create an array of 100 random numbers. Let us call this sort_1 function inside a loop which runs 1,000 times and log time taken to sort numbers. Refer to the below pseudo code:

//generate 100 random numbers and store in a array called unSortedArray

array unSortedArray = randomNumberGenerator(100)

measureStartTime

loop 1000 times {

//calling sort_1 function with an array of random numbers 1000 times

array sortedArray = sort_1(unSortedArray)

}

measureEndtime

//Log the time taken by sort_1 function

timeTakeBySort_1 = measureEndtime - measureStartTime;

measureStartTime

loop 1000 times {

//calling sort_2 function with an array of random numbers 1000 times

array sortedArray = sort_2(unSortedArray)

}

measureEndtime

//Log the time taken by sort_2 function

timeTakeBySort_2 = measureEndtime - measureStartTime;

Test Result: After running this test, you’ll find, log times are pretty close.A difference of few percents maybe. We've got a fair idea how long these functions/methods each take to sort 100 numbers.

Side Note: The small difference in time is because sorting 100 numbers is a fairly small task for machines. Any algorithm you choose, unless extremely inefficient, you will find a difference of a few milliseconds only.

But, did it solve our problem yet? We still can’t figure out which one is fastest or better than other.

Time for Test 2:

Test Plan: Instead of creating 100 random numbers and calling the sort function 1000 times, why not create a single large random number array and call the sort function only once.

//create an array with 1000000 numbers

array unSortedArray = randomNumberGenerator(1000000)

measureStartTime

array sortedArray = sort_1(unSortedArray)

//Log the time taken by sort_1 function

measureEndTime

//Log the time taken by sort_1 function

timeTakeBySort_1 = measureEndtime - measureStartTime;

measureStartTime

array sortedArray = sort_2(unSortedArray)

//Log the time taken by sort_2 function

measureEndTime

timeTakeBySort_1 = measureEndtime - measureStartTime;

Test Result: Ah, this test did the job for us. With the humongous amount of data, you may find that one method beats other. Not just by few percents. Rather by a dramatic huge margin. One method could sort the array within a fraction of seconds and other is still sorting it for us.

I am sure you might be wondering is this even possible?

Factors on Which Algorithm Run time Depends

So, let me break this to you: this kind of result is not at all unusual. And this is one of the major reason and core challenges one faces with algorithm analysis.

The (correct) algorithms we write are neither slow or fast in itself. It would be very easy that way. It also very much depends on how much data we are asking these algorithms to work on.

My Computer Vs. SuperComputer

But, I ran both these tests on my computer. What if, I ran this algorithm on a supercomputer. Will the result still be same?

Factors like the speed of the processor, programming language used to implement it and hardware will drastically impact the run time of the algorithm.

So, now the main question is: how do we go about analyzing/measuring/estimating the time taken by algorithms to run ignoring low-level hardware details and subject to varied input sizes?

(Also, problem fondly known as how do we go about measuring Time Complexity of an algorithm)

Not-So-Boring-Math to the rescue!

Mathematical notation called as Big O Notation/ Big O Complexity at our rescue.

What is Big O Notation?

What the heck is Big O Notation?

In computer science, we use Big O to classify algorithm where we express how quickly the run-time or space requirements grows relative to input, as the input size grows arbitrarily large.

Let me break the definition down into simpler words:

- Measuring Run time/Time Complexity Of An Algorithm:

Sorry, it’s difficult to jot down the exact run time of an algorithm. Why you may ask. Remember, our computer vs supercomputer issue. Different hardware, processor speed affects total run-time of the algorithm. So instead of talking about run time directly, we use Big O to talk about how quickly the run time grows when the data (read: input) given to our algorithm increases.

- Run time could be expressed in terms of seconds, but how do we measure the growth of run time?

Yes, I agree logging time would have been hassle free. But, since, we’re measuring how quickly our run time grows, we need to measure speed in terms of something else.

In Big O, we use the :

- size of the input/data which we denote as “n”

- O stands for the order

So, you’d often find us saying, “Hey, the run time of that algorithm grows on the order of the size of the input i.e O(n)”. Or something like, “on the order of the square of the size of the input i.e O(n²)”.

If all this explanation seems abstract so far, don’t worry. Let’s look at some examples analyzing our code with Big-O notation for more clarity.

Real Life Big O:

When analyzing algorithms/operations, we often consider the worst-case scenario. What’s the worst that can happen to our algorithm and when does our algorithm will do some serious heavy-lifting work?

Let’s say you have a cabinet full of files. You may have many tasks like finding files, getting rid of duplicates etc. You also know that if there are more files in the cabinet, longer it will take you to accomplish your task.

Assuming we’ve to perform some of the task mentioned above. Here are few scenarios and ways in which we can find the file from the cabinet and their corresponding order of notation(operation’s time complexity).

O(n) — Linear Time :

Scenario/Problem: Let's say we want to find a particular file in the cabinet. But, the files stored in the cabinet are not sorted or alphabetized. How can we search for our file?

Approach: We will have to look at each and every file present in the cabinet till we can find our file.

function searchForFile(filesArray, fileName){

//We loop through every file till we find what we're searching for

for (let i=0 ; i < filesArray.length; i++){

if (filesArray[i] == fileName)

return true

}

}

//Name of files present in the cabinet

filesArray = ['Abra', 'Snitch', 'Falana', 'Dinkana']

searchForFile(filesArray,'Snitch')

Worst-Case Scenario & Complexity Explanation:

- In the worst case scenario, we will have to look at n files. (n is highest no of files present in the cabinet)

- The number of steps we take is directly related to your input size. Means if the cabinet had 10 files, we have to look at all 10 files till we find our file in our worst-case scenario.

- Hence, we say, the run-time of above algorithm grows on the order of the size of the input i.e O(n).

- Single for loops, linear search are examples of linear time

O(1) — Constant Time :

Scenario/Problem: You need a file and remember exact location where you had kept the file.

Approach: Open the cabinet, pick the file from the location. End of the story.

function searchForFile(filesArray, fileName){

return filesArray[1]

}

//Name of files present in the cabinet

filesArray = ['Abra', 'TheSnitch', 'Falana', 'Dinkana']

searchForFile(filesArray,'TheSnitch')

Worst-Case Scenario & Complexity Explanation:

- *The above method runs in O(1) time relative to its input. *

- The input array could be 1 item or 1,000 items, but since we know where our element resides, retrieving it would just require one “step”.

- Picking element by array index is an example of constant time.

O(n²) — Quadratic Time :

Scenario/Problem: You’ve duplicate files in the cabinet and you want to remove them. But, you’ve no idea how many duplicate copies of each file lies in the cabinet.

Approach: We will have to pick the first file, check it against all the other files. Then take file number 2 and repeat the same action until you got to the last file.

function removeDuplicate(fileArray) {

for (let i = 0; i < fileArray.length; i++) { //has to go through each file in the cabinet

for (let j = i + 1; j < fileArray.length; j++) { //and compare each file you pick with others

if (fileArray[i] == fileArray[j]) {

var index = fileArray.indexOf(fileArray[i]);

if (index > -1) {

fileArray.splice(index, 1);

}

}

}

}

return fileArray

}

fileArray = ['Abra', 'Snitch', 'Abra', 'Falana', 'Dinkana']

removeDuplicates(fileArray) //returns fileArray without duplicates

Worst-Case Scenario & Complexity Explanation:

- In the worst case scenario, we will have to look n² times, comparing each and every file with the others.

- Each pass to outer loop O(n) requires going through entire array through the inner-loop which is another O(n) operation.

- Nested for-loops are an example of quadratic time complexity

O(log n) — Logarithmic time :

Scenario: The files in the cabinet are in the order. We’ve to search a file marked with a label.

Approach: We can start in the middle, see in which half should be in, go to the middle of the half and repeat the steps until we found our file.

//There are a bunch of other checks that should go into this example for it to be truly functional,

// but not needed for this explanation

function searchForFile(array, fileNo){

var midPoint = Math.floor( array.length /2 );

if( array[midPoint] === fileNo) return true;

if( array[midPoint] < fileNo) // only look at second half of the array

if( array[midpoint] > fileNo ) // only look at first half of the array

//recursively repeat until you arrive at your solution

}

fileLabelArray = [10, 20, 30, 40]

searchForFile(fileLabelArray, 30 )

//Input given to the function are in order i.e sorted.

- When our files were not ordered, we had to see each and every file resulting in an O(n) time complexity.

- But, since the files are ordered, here, given an input of size n, the number of steps it takes to accomplish the task are decreased by roughly 50% each time through the algorithm. (If you do the math, it works out to be an O(log n) operation)

- O (log N) algorithms are very efficient because increasing amount of data has little effect at some point early on because the amount of data is halved on each run through.

- Recursive algorithm like binary search is an example of logarithmic complexity.

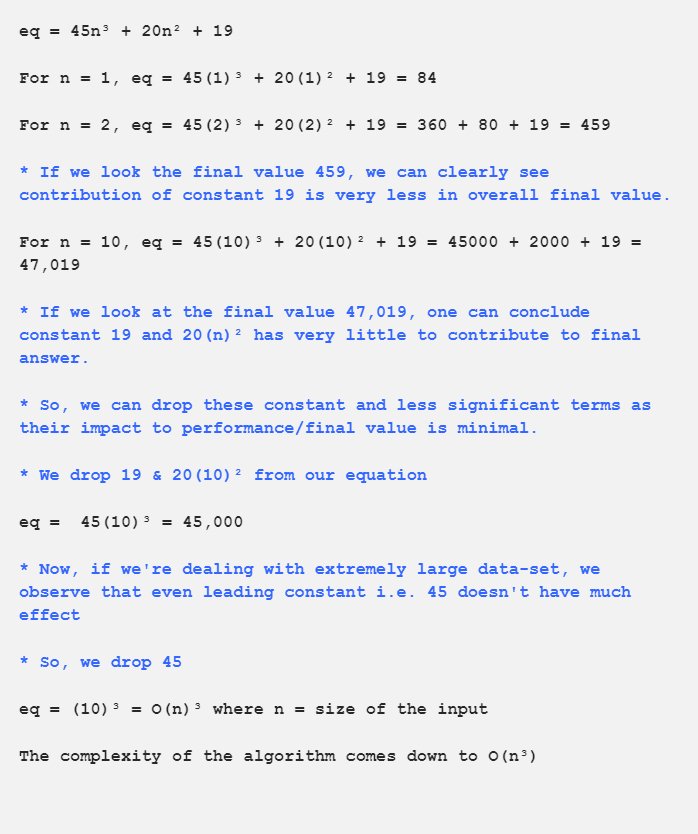

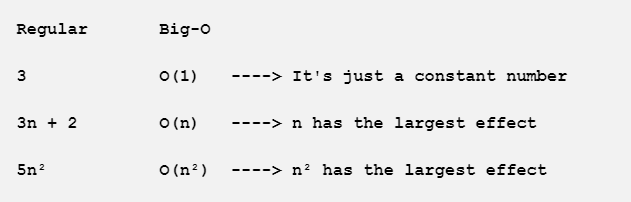

Just drop the constants & less significant terms:

Let’s take a small algorithm where we’ve to calculate the complexity of the equation by throwing away:

- Leading constants

- Less significant terms.

Why we do this?

If you can remember or scroll back to the definition of Big O, there’s a term in bold which states that when input size(n) grows arbitrarily large.

As n gets really big, adding 200 or dividing by 10 has a decreasingly significant effect. So, we can safely throw these terms out. We only concentrate on a principal activity which has the most impact.

What is Space Complexity?

(I know, I know this is the biggest Big O definition explanation in the history of mankind. This is the final concept I promise! )

We need to analyze or care about memory cost(space complexity) just like we did for our precious time. Best part there isn’t much difference between them.

Space complexity is space taken by algorithm to complete its algorithm relative to its input size.

Space Complexity = Constant Space + Auxiliary Space

- Constant Space is generally fixed for an algorithm. Think of the space taken by local variables and input variables.

- Auxiliary Space is extra/temporary/additional space used by an algorithm. Mostly temporary variables.

Let’s see few code examples to simplify our definition:

O(1) Space Complexity :

//Our function takes three integer parameters x, y & z and returns sum of three numbers

function sum(x, y, z) {

var r = x + y + z;

return r;

}

We know, Space complexity = Constant Space + Auxiliary Space

Constant space is local variables & inputs i.e variable x, y, z and r in our case.

Auxiliary Space is for the temporary variable. Not used in above example. We consider 1 unit for each variable present in our algorithm

Space complexity = 1 + 1 + 1 + 1 + 0 = 4 = O(1)(remember, our constant time Big O notation?)

O(n) Space Complexity:

//Our function sum accepts an array with 4 elements & second parameter gives length of an array

function sum(a, n) {

var r = 0;

//variable i is our temporary variable

for (var i = 0; i < n; ++i) {

r += a[i];

}

return r;

}

sum([1,2,3,4], 4)

Now, above method has an input of array and another input gives size/length of that array. An array has n elements, each will hold 1 unit of memory. So space taken by an array is (1n) where n = number of elements in the array

Constant space = Space taken by array + variable n + variable r

Auxiliary Space = Space taken by temporary variable i

Space Complexity = (n * 1) + 1 + 1 + 1 = O(n)

This is awesome except:

Big O is a powerful tool, but one should use it wisely. Sometimes there’s a tradeoff between saving time and saving space, so one has to take a crucial decision on what needs to be optimized.

Like Big O royally ignores constants and less significant terms. But, sometimes they matter too. Imagine, if we have got a script that goes on for 5 hours, an optimization that divides the runtime by 10 might not affect Big O, but it can still save us few hours of waiting. Also, beware of premature optimization.

Hence, it is rightly said that one must develop the skill to optimize time and space complexity as well as be wise enough to judge if these optimizations are worthwhile.

Closing Notes:

- Big O time and space complexity usually deal with mathematical notation. This article aimed at covering the topic in simpler language, more by code and engineering way. Feel free to check out pure mathematical notation here

- Also, to solve our time complexity problem, we’ve three types of Asymptotic notation.1) Θ Notation 2) Big O Notation 3) Ω Notation. We looked at Big O as it is most widely used asymptotic notation. And also, it deals with worst-case, something we need in efficiency tradeoff.